Interview

Big Tech's Mind Reading Ambitions Must Be Regulated, Says Expert

Marcello Ienca, a senior researcher at the health, sciences, and technology department of ETH Zurich, spoke to Calcalist about the dangers of the neuro-technological era

13:40, 18.02.20

Have you ever felt like Facebook or Google are reading your mind? The concept may not be as far-fetched as you may think. In fact, many tech companies are hard at work on technologies that can do just that. According to Marcello Ienca, 31, a senior researcher at the health, sciences, and technology department of the Swiss Federal Institute of Technology in Zurich (ETH Zurich), the consequences of these developments could be dire.

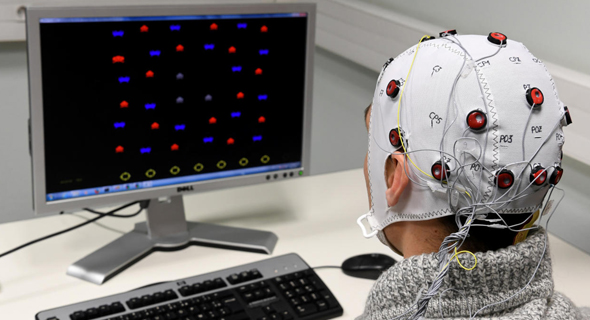

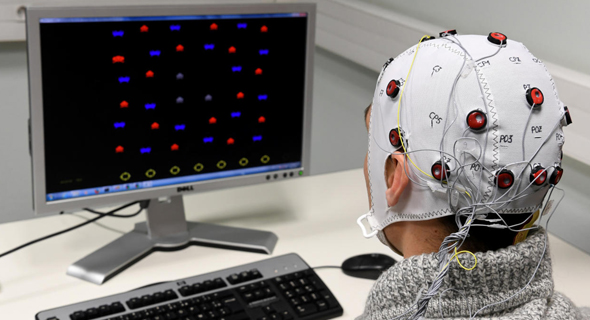

Neuro-technologies (illustration). Photo: AFP

In July, relentless entrepreneur Elon Musk announced a breakthrough in what he called an effort to save humanity from machine-learning technologies by using technology. Musk’s Neuralink Corp. presented its latest development, an array of tiny electrodes (between 4 micrometers and 6 micrometers in width, less than the width of a human hair) that can be used to pick up brain activity related to speech, sight, hearing, and movement. The device can both read information from the brain and send information to it, and it has already been tested on a monkey. Human testing is planned for later this year, according to Musk.

Unlike some of Musk’s other futuristic ventures, this type of technology is already in use. In China, for example, state-owned companies have been known to use neuro-technological wearables to monitor their employees’ brain activity and levels of concentration in an effort to increase productivity. More recent reports show some schools in China have begun using similar devices, developed by Somerville, Massachusetts-based company BrainCo Inc., on their students.

What is happening in China is likely to become more commonplace soon enough, as Facebook is already hard at work on a brain-machine interface (BMI) that can read minds.

Using a wearable device, Facebook aims to decrypt the human brain’s language center and identify the words that pop into a user’s head. The system is supposed to identify up to 100 words per minute. The company reportedly had a 61% success rate in extracting spoken words from brain waves.

When Facebook’s vision of connecting people to their online feeds directly through their brains becomes a reality, the company will gain direct access to the brain activity of millions of people, Ienca warned in a recent interview with Calcalist. It could then, for example, recognize the P300 waves that appear when the brain registers something interesting, thus gaining insights into our preferences, political stances, religious beliefs, and sexual orientation, he said. “This type of information can put you behind bars in some countries,” he added.

This is just one of many frightful scenarios made possible with the dawn of this new technological age, as BMI and brain-computer interface (BCI) technologies are being developed by both governments and private companies, for commercial, medical, and security purposes. Ienca’s focus is on where the line needs to be drawn to protect our brains against unwanted mind-reading and manipulation. To this end, Ienca is currently working with the OECD (Organization for Economic Cooperation and Development) to implement clear guidelines for the use of neuro-technologies.

A lot of companies are building their business models around neuro-technology, and these technologies have a vast potential to improve the lives of people with disabilities, Ienca said. It is important to maintain the field’s accelerated growth but at the same time make sure this growth is based on responsible innovation, he added. That is why he is urging governments to create a legal framework for the use of neuro-technologies that would ensure that users’ rights are protected.

Some of the technology's problematic aspects are already beginning to surface, making regulation essential and urgent, Ienca said. As an example, Ienca points to people with mobility disabilities who use neuro-technologies to operate aids such as prosthetic limbs or wheelchairs. Many of these users admitted to feeling unsure whether they were the ones deciding to perform a certain action, or said they felt as if they decided to perform it, yet were unsure they had actually wanted to, he said. Another, more significant problem has to do with deep brain stimulation (DBS), a treatment that has been proven effective for people suffering from depression or Parkinson's disease but has also been shown to potentially cause an identity crisis or a change in personality in some patients.

These technologies show a lot of promise, but they are also very transformative and can deeply affect a person’s perception of their own identity, Ienca said. Some patients report feeling different when using such technology, while others claim to only feel like themselves when it is active, he added. According to Ienca, this is a unique case in which one’s sense of personal identity is left in the hands of the private companies that manufacture and operate DBS, BCI, and BMI devices. The latest generation of DBS devices includes a closed-loop control system, which means machine-learning algorithms are responsible for the level of electrical stimulation the patient’s brain receives. “This raises philosophical issues concerning self-identity and the ability to alter cognitive functions,” Ienca said.

But it is not purely a philosophical question, as these technologies also have very concrete technical and legal implications. Technology has, in many ways, eroded privacy and made people more accustomed to its constant deterioration. In this day and age, supermarkets know exactly what we buy, credit card companies know where we like to hang out, and social media networks know us better than our own friends. The age of neuro-technologies, however, is exposing us to new forms of privacy infringements that we have never seen before, according to Ienca. For now, the ability to read someone’s mind is still very limited, but very sensitive information can already be derived from brain waves, for example early signs of Alzheimer's disease in a seemingly cognitively healthy individual, he said. If leaked to an insurance company, this type of information could lead to policy discrimination, and if it reached the person’s employer, it could cost them their job, he added.

It is important to remember, Ienca said, that the data gathered by neuro-devices does not vanish into thin air, but is in fact stored. Even if our ability to decipher most of it is limited for now, in 20 years, as software advances, we could read more and more from it, he added. That is why he thinks it is imperative to prepare now for the day when our minds can be read.

Technological advancement is always ahead of regulation; that is only natural and not necessarily a bad thing, according to Ienca. Technology should move as quickly as possible in order to offer solutions, but it is important to remember it has side effects that can help bad actors thrive, he said.

According to Ienca, the most important tool in addressing threats is to develop awareness and demand transparency. “People are willing to trade their privacy for good service, but in order for them to make a decision they have to be equipped with reliable information,” he said. Many neuro-technology companies exaggerate their marketing claims, promising to reduce stress, boost learning, or enhance memory, but there is no evidence that these claims are true, he said. When unrealistic expectations are encouraged, he said, people might be more willing to share private information in exchange for what they believe would be an enormous reward. People thus need to be informed as to the true capabilities of the technology as well as where their information is stored, who has access to it, and whether it might be sold to a third party, he explained.

In 2017, Ienca partnered with law researcher Roberto Andorno to outline four new neuro-specific human rights they believe should guide any legislation and ruling in the industry. These four fundamental rights, according to Ienca and Andorno, are the right to cognitive liberty, which gives people control of whether or not neuro-technological tools can be used on their brains; the right to mental privacy, which gives people control over what information can be collected from their brains and who can use it; the right to mental integrity, which protects people from physical or psychological harm that could be caused by neuro-technology; and the right to psychological continuity, offering protection against outside influences on a person’s sense of self.

These four rights are meant to give people broad protection in a field that is currently completely unregulated with no established standards, Ienca said.