Hackers turn to AI for cyberattacks, but unwittingly reveal their plans

OpenAI report highlights both the threats and the opportunities of AI in cybercrime.

Ever since generative artificial intelligence (GenAI) tools, led by OpenAI's ChatGPT, entered our lives, concerns have grown about how hostile actors might exploit them to plan and execute more sophisticated cyberattacks. An unexpected consequence, however, is that these actors' use of modern chatbots also offers important insight into their methods, tools, and activities.

A report published last week by OpenAI said that monitoring attackers on its platform offers "unique insights" into their operations, including the infrastructure and capabilities they are developing, often before these become operational. This has allowed OpenAI to track the new methods and tools that attackers are trying to adopt.

In recent months, OpenAI has published several reports on the misuse of ChatGPT by malicious entities. These range from attempts by Iranian actors to interfere with U.S. elections to efforts by cybercriminals using the platform to research targets, improve attack tools, and develop social engineering techniques.

According to OpenAI, more than 20 instances of cyberattacks and influence operations using its platform have been uncovered since the beginning of the year.

One such case involved SweetSpecter, a group likely based in China, which used ChatGPT for intelligence gathering, vulnerability research, and code writing, and to plan a phishing attack targeting OpenAI employees. The attack was thwarted by existing security measures. SweetSpecter's activity on the platform was flagged following a tip from a reliable source, which enabled OpenAI to identify the group's accounts and monitor its operations. "Throughout the investigation, our security teams used ChatGPT to analyze, sort, translate, and summarize interactions with hostile accounts," OpenAI reported.

Similarly, OpenAI identified how attackers used its tools to research vulnerabilities, such as potential security loopholes in a well-known car manufacturer’s infrastructure and commercial-scale SMS services. In another instance, an Iranian-linked group, CyberAv3ngers, sought to exploit ChatGPT to gather intelligence, identify vulnerabilities, and fix bugs in malicious code targeting infrastructure in the U.S., Israel, and Ireland.

CyberAv3ngers' activities included attempts to retrieve default usernames and passwords for infrastructure-related hardware and requests for help with writing and improving malicious code. These activities allowed OpenAI to understand which technologies the attackers aimed to exploit.

A further example involved STORM-0817, another Iranian-linked group, which used OpenAI's models to improve malware designed to extract data from social media accounts, including in a test targeting an Iranian journalist critical of the government. According to OpenAI's report, this provided insight into the group's future plans and into capabilities that were not yet fully operational.

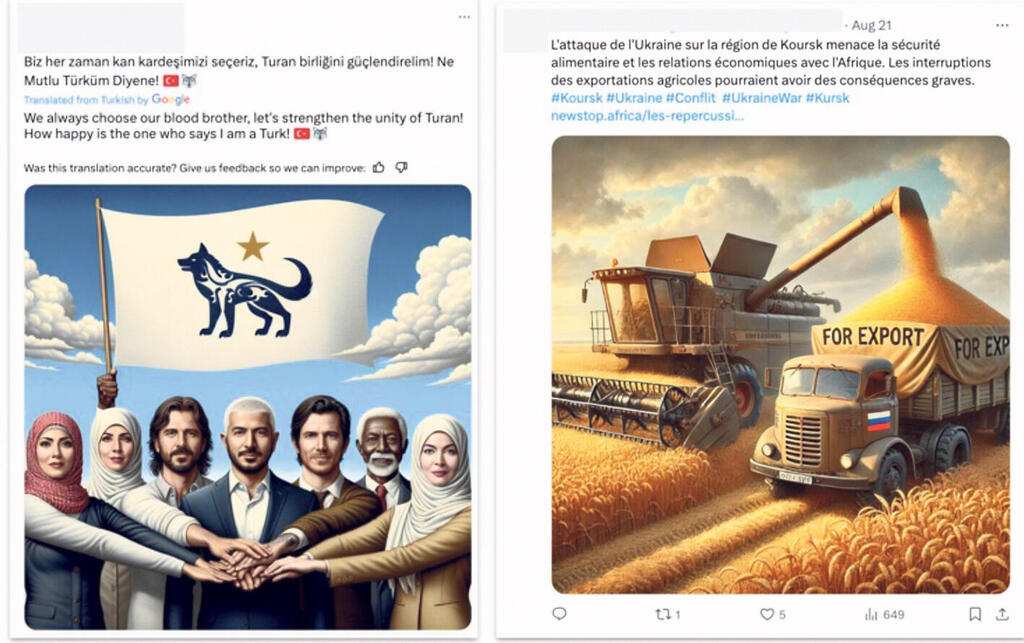

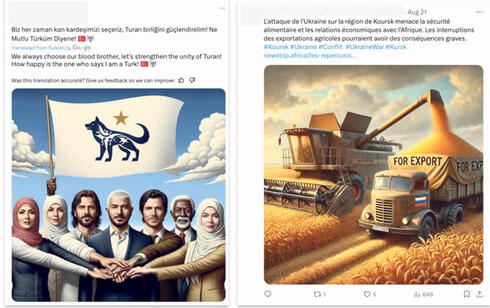

Beyond cyberattacks, OpenAI also uncovered influence operations. One report highlighted a network of 150 accounts operating from the U.S. that used ChatGPT to generate posts and comments for social media platforms such as Facebook and X. "The network's reliance on AI made it extremely vulnerable to disruption," OpenAI noted. Once the network was shut down, its social media accounts stopped posting during critical election campaigns in the EU, UK, and France.

While OpenAI presents these as examples of cyber and influence operations that its models helped disrupt, it downplays the extent to which GenAI can assist malicious actors. In some cases, OpenAI suggested that the information attackers sought could easily have been found through search engines. The company also stressed that GenAI models have not introduced new types of threats; rather, they have made existing kinds of attacks and operations faster and cheaper to carry out, for example by automating the creation of fake social media profiles.

At the same time, the report notes that AI tools like ChatGPT give companies such as OpenAI greater opportunities to detect and stop malicious activity at an early stage. On the defensive side, AI can reduce the need for large, highly skilled teams to handle complex investigations and allows attacks to be detected more efficiently.

Despite these successes, OpenAI's claims should be viewed with some caution. The company has an interest in portraying its models as safe and in showing that it can detect misuse. Yet its report does not address the risk that more sophisticated actors could evade detection, nor does it fully consider how attackers who have been caught might refine their techniques and return with more advanced methods.

Moreover, while OpenAI has demonstrated significant detection capabilities, other platforms may lack similar safeguards. Some malicious actors have already migrated to alternative AI models, and the rise of open-source GenAI models, many of which can be run locally without any external oversight, poses additional challenges. Run without supervision, these tools give attackers powerful resources whose misuse could be harder to track and prevent in the future.