US investigations into AI giants can’t keep up with the pace of development

Microsoft, NVIDIA, and OpenAI face regulatory heat as US agencies divide oversight responsibilities.

Last week, the U.S. Department of Justice (DOJ) and the Federal Trade Commission (FTC) agreed on a division of labor for investigating major AI companies: Microsoft, OpenAI, and NVIDIA. The DOJ will investigate NVIDIA, while the FTC will lead the investigation of OpenAI and Microsoft, according to a report by The New York Times.

On the surface, this appears to be a procedural step with no wider significance. After all, the antitrust investigations have yet to begin. However, the last time these agencies made such a move, in 2019, when they divided responsibility for investigating Apple, Amazon, Google, and Meta, it resulted in major antitrust lawsuits. More significantly, regulators are now signaling that they take the potential dangers of modern AI very seriously. The question is whether the regulatory process will be swift enough to keep pace with developments in the field.

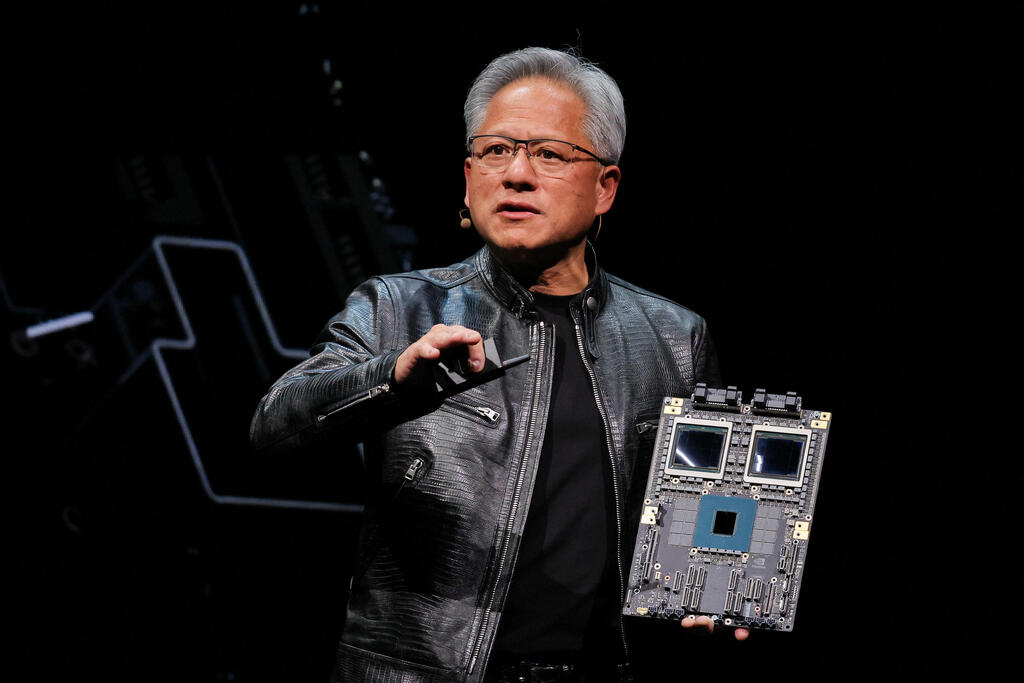

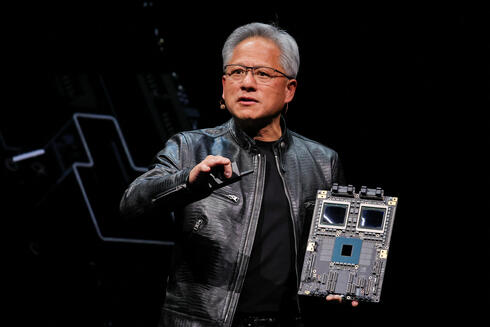

It's no coincidence that the two publicly traded companies with the highest market valuations in the world are also leaders in the generative AI market. Microsoft, with a market value of $3.15 trillion, has invested $13 billion in OpenAI and collaborates closely with the company, leading the market in integrating AI capabilities into its products. NVIDIA, with a market value of $3.01 trillion, pushed Apple out of second place last week and has shown unprecedented growth on Wall Street over the past year thanks to its near-total dominance of the AI chip market. While OpenAI is not a publicly traded company, it is the driving force behind the current AI revolution.

Together, these three companies can be considered the holy trinity of the GenAI market, with each playing a distinct and crucial role. OpenAI develops models and services that many others rush to imitate; Microsoft demonstrates how to integrate them into a variety of products; and NVIDIA provides the immense processing power needed to train and run these models. Until now, the companies have avoided significant antitrust scrutiny for these activities.

In July 2023, the FTC launched an investigation into whether OpenAI's data collection practices harm consumers, and in January it began examining the collaboration between OpenAI and Microsoft, as well as Google's and Amazon's investments in Anthropic. However, these are relatively limited investigations, and U.S. AI regulation lags behind that of the European Union, which has already passed comprehensive AI legislation.

The division of responsibilities indicates that regulators are preparing to escalate and launch a broad antitrust investigation. According to The New York Times, the DOJ's investigation into NVIDIA could focus on how its software locks users into its chips and how it allocates those chips to customers. The FTC's investigation could focus on how Microsoft has integrated OpenAI's models into Bing, Word, PowerPoint, and other products, as well as how Microsoft's investment in OpenAI (which gave it a 49% stake in the company) and the two companies' close collaboration affect technological development.

This is still a very early stage: the agreement itself is expected to be formally announced in the coming days. However, the division of responsibilities is a significant signal of intent, especially in light of past experience. In 2019, the agencies reached a similar agreement, with the DOJ taking responsibility for Google and Apple and the FTC for Meta and Amazon. This resulted in extensive lawsuits against the companies. A government victory could cost the companies tens of billions of dollars in annual revenue and might even lead to their breakup.

If the current agreement leads to a similar outcome, we may see serious lawsuits against NVIDIA, Microsoft, and OpenAI, which could, for example, seek to dissolve the alliance between Microsoft and OpenAI on the grounds that it is effectively equivalent to an acquisition. However, the previous agreement also taught us that these proceedings take time, far too much time. The lawsuit against Apple was filed in March 2024 and the one against Amazon in September 2023; both are still far from trial. The lawsuit against Meta was filed at the end of 2020 (it was initially dismissed and then refiled), and the trial has yet to begin. Closing arguments in the Google case (filed in 2020) were heard only last month; no decision has been issued yet, and a lengthy appeals process is expected. Meanwhile, the companies continue to operate largely as usual.

At the same time, a cumbersome and slow regulatory and legal system is being used to regulate a field that is developing at an extraordinary, possibly unprecedented, speed, where almost every month brings new developments and capabilities to the market. What chance is there of effective or relevant regulation when the issues regulators are tackling today, which will only be resolved in a few years, may become irrelevant by the next quarter? One could already argue that regulators are doing too little, too late. If the goal is to truly regulate artificial intelligence, prevent the abuse of market dominance, and impose real oversight on the development and deployment of potentially destructive products, much more needs to be done, and much faster.

EU launches AI Office

The European Union, which is far ahead of the U.S. in AI regulation, last month announced the establishment of an official AI Office. The office follows a months-long process during which EU member states signed and ratified the AI legislation. The office, whose organizational changes take effect on June 16, will oversee the implementation of the rules governing AI development under the new AI law. According to a report by Euronews, Lucilla Sioli, Director for AI at the European Commission, will lead the new office.

The AI Board, which will include representatives from each of the 27 member states, will assist the AI Office in regulatory processes and is expected to hold its inaugural meeting this month. According to a report by TechCrunch, the office will comprise five units and employ over 140 people, including technical experts, lawyers, political scientists, and economists, and will expand in the coming years as needed. The Regulation and Compliance unit will liaise with member states, coordinate enforcement actions, ensure that the AI law is applied across the EU, and handle investigations, infringements, and sanctions. The AI Safety unit will identify systemic risks in general-purpose AI models and develop, among other things, risk assessment methods.

Another unit will focus on “AI for Social Good” and will plan and execute international projects relating to climate simulation, cancer diagnosis, and urban development. The “Excellence in AI and Robotics” unit will support and fund AI R&D. The “AI Innovation and Policy Coordination” unit will oversee the implementation of EU policies in the field, monitor technological trends and investment, and promote AI applications through the network of European Digital Innovation Hubs.

According to Reuters and CNBC, fines for violating the AI law will range from 7.5 million euros or 1.5% of a company's global revenue to 35 million euros or 7% of global revenue, depending on the type of violation.