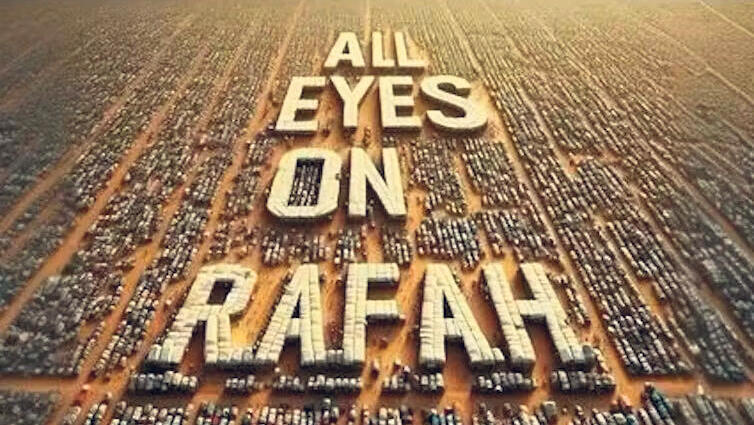

All eyes are not enough to spot a fake image

A viral AI-generated image, supposedly depicting a refugee camp and captioned “All Eyes on Rafah,” has ignited a discussion about people's ability to recognize realistic but inauthentic images, raising concerns about the spread of misinformation and the lack of tools for identifying AI-generated content.

Amid the ongoing debate regarding the IDF operation in Rafah, pro-Palestinian activists launched their own campaign, adopting the simple slogan "All Eyes on Rafah." The campaign relied on two digital strategies: manipulating algorithms and flooding social media. It also involved digital watermarks, which are meant to identify the creator of an image and prevent copying or unauthorized use. One method worked, suggesting at least one lesson about the synthetic outputs of generative AI: the idea behind watermarks is sound, but the watermarks themselves don’t work.

Manipulating the algorithm was also quite effective. Content creators, mainly on TikTok, began spreading videos about Rafah and tagging them with unrelated hashtags, often alluding to celebrity stories, in order to attract interest and get users who were expecting celebrity content to watch political content instead. One particularly popular tag was "What did Tom Holland do to Zendaya?," referring to the celebrity couple.

At the center of the campaign were AI-generated images: synthetic images with varying degrees of realism, depicting imagined scenes in Gaza. In the past two weeks, it has seemed as though no corner of the internet is free of images generated by Dall-E or Midjourney for the pro-Palestinian cause.

The main catalyst for the trend was a viral image ostensibly depicting a refugee camp with the caption "All Eyes on Rafah" in English. This image was actually first created on February 14 by a user named Zila AbKa, who shared it in “Prompters Malaya,” a Facebook group with a quarter of a million members. This is an active group for text-to-image model enthusiasts, who regularly share hyper-realistic images on topics like cats, family, flowers, or superheroes. Hundreds of images are uploaded to the page daily, and users offer each other tips on creating new images or fixing existing ones. When AbKa's original image was published, it included a watermark stating that it was "created using AI by Zila AbKa." At that time, the image had not yet attracted interest outside the group.

About two weeks ago, something changed. An Instagram user took the image, removed the watermark, resized it to fit Instagram's photo format, and re-uploaded it without credit. The image went viral. "47 million shares across the globe," wrote the manager of the Prompters Malaya group in a post recognizing AbKa as the original creator of the viral image. "The most recognizable image in the world today. Bless you, AbKa, may you be eternally rewarded. Amen." He concluded with a message to the world: "Who said AI-generated images can’t shake the world, and maybe even save lives."

AbKa’s own response did not indicate concern over copyright or the manipulation her image underwent. "Praise be to God," AbKa wrote in a post, "even though it was created by AI, the important thing is the goal of opening the eyes of many people... may the victory be theirs in Palestine... and ours as well as humans."

AbKa might not care that her watermark was easily removed, but what can be learned from this nonetheless? Quite a lot. In recent months, much has been said about the new era of the "watermark," a sort of standard created by generative AI companies, supposedly to address the theft and forgery problems associated with such models. The idea of watermarks initially came from researchers in the field, who argued that companies should be required to automatically add the mark to every image produced by their generators, such as Google’s Gemini or OpenAI’s Dall-E.

The goal is for users to immediately recognize an AI image, even if they have never encountered generative AI creations before. The reasoning is simple: today it is easy to create images with varying degrees of realism at low cost, and the sheer quantity and quality of the images distributed on the internet make it difficult for users to distinguish between real and fake images, contributing to the knowledge crisis already present in the digital age. According to various estimates, about 34 million images are created daily using image generators, for a total of 15 billion images since these products were launched about two years ago.

As time passed with no significant action from AI companies, it became apparent that they were mainly focused on developing their products, not on dedicating time and resources to safety and user protection. Accordingly, and in light of the rising popularity of these tools, pressure from lawmakers increased. In November, the Biden administration mandated watermarks in an executive order, and EU legislation launched in May requires all companies to add a watermark to AI-generated content.

Watermarks were launched amid a mini arms race, with each company touting its own solution: a pixel-embedded watermark, a watermark embedded in a symbol containing metadata about the creators, a watermark as a hidden pattern in text, a watermark invisible to the human eye but visible when you click on the image. It quickly became apparent, as the "All Eyes on Rafah" image shows, that these efforts by tech companies are insufficient and that removing watermarks is simple.

In February, a computer science professor in Maryland published research showing how he managed to remove or "break" watermarks. He also demonstrated how easily watermarks could be added to real images to further confuse users. In May, another study highlighted the tool's weakness. Two things follow from this. First, companies are clearly not doing enough to protect users from forgeries and the spread of false information on the internet. Second, the case shows how important watermarks are: as long as the watermark was present on the image, its virality was limited, because people do not want to be reminded that an image is inauthentic or created by a machine. This means that even if a watermark doesn’t work, the idea behind it is correct: marking images created by generative AI slows the spread of forgeries.

It doesn’t matter whether AbKa’s intentions are pure or whether some users recognize a fake image; when images are distributed on the internet in the hope that they will go viral, it needs to be immediately obvious to anyone that an image is not real but generated by AI. As long as there is no watermark, many will be unable to determine whether the image is real. The impact of this is clear. For example, some of the most senior news outlets in the world reported on AbKa’s image without definitively stating that it was AI-generated: "It is likely that this image was created by artificial intelligence," wrote The Washington Post; "What appears to be an AI-generated image," wrote The New York Times.